Usage

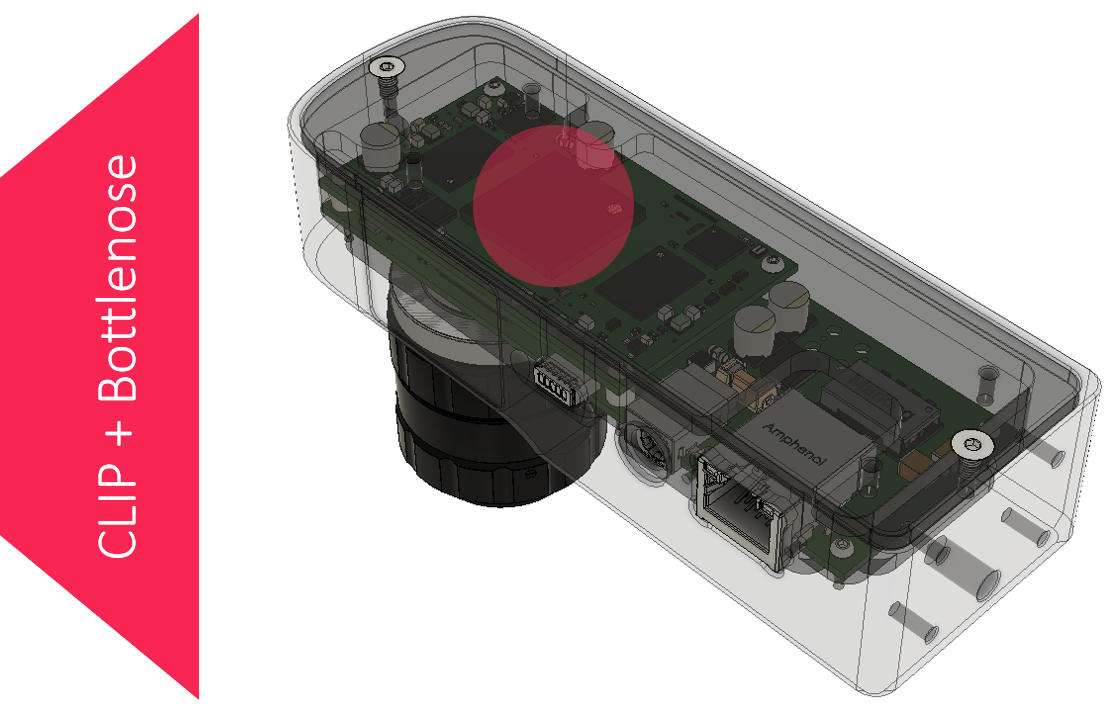

This guide covers the second-generation AI capability on Bottlenose, in which OpenAI's CLIP model has been ported to the camera's on-board hardware accelerators.

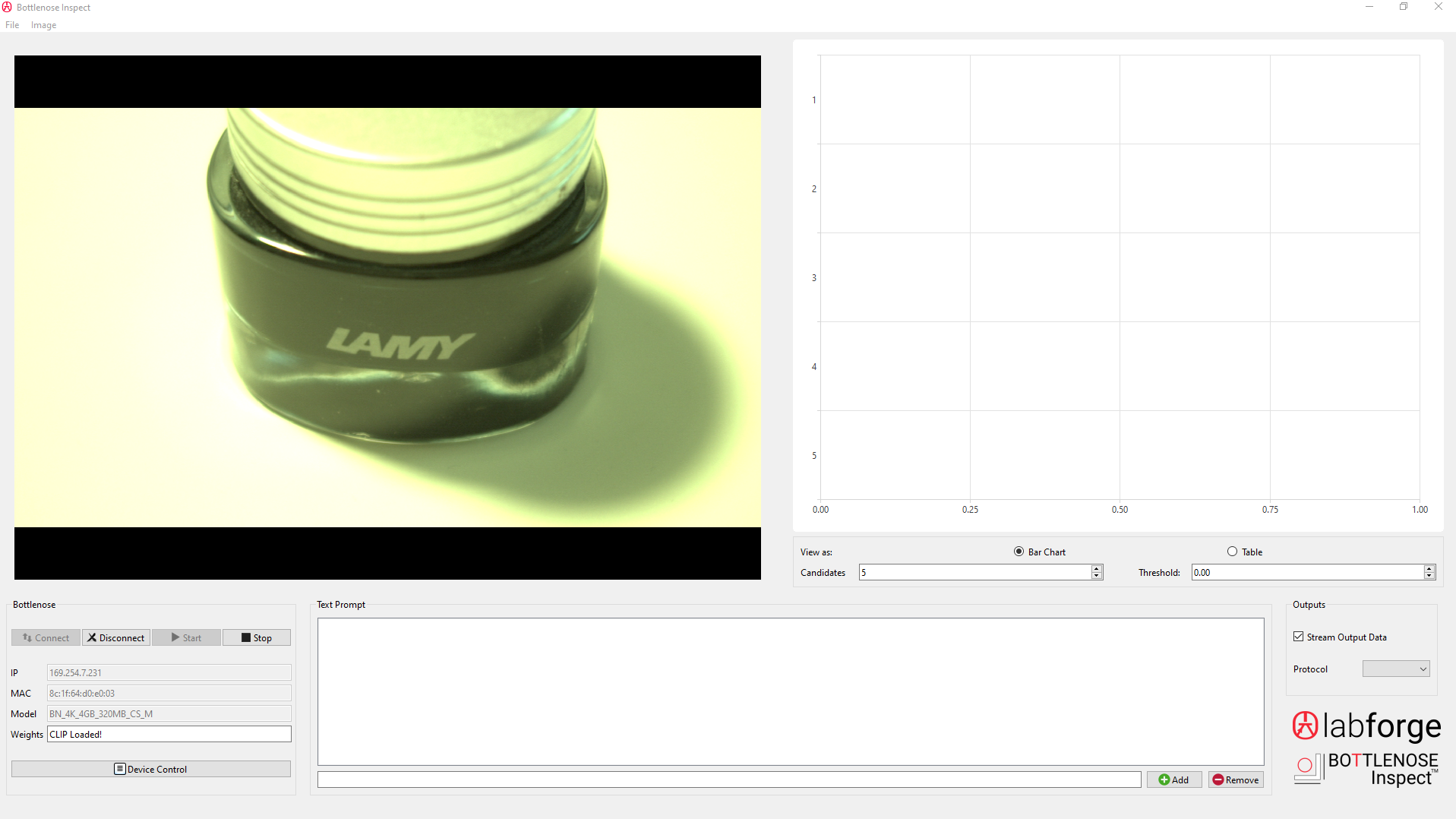

Click Connect and select the camera. Press Start to start streaming.

Open the lens aperture all the way and use the focus ring to focus the image. You should see a live camera feed with an overexposed image, as shown below.

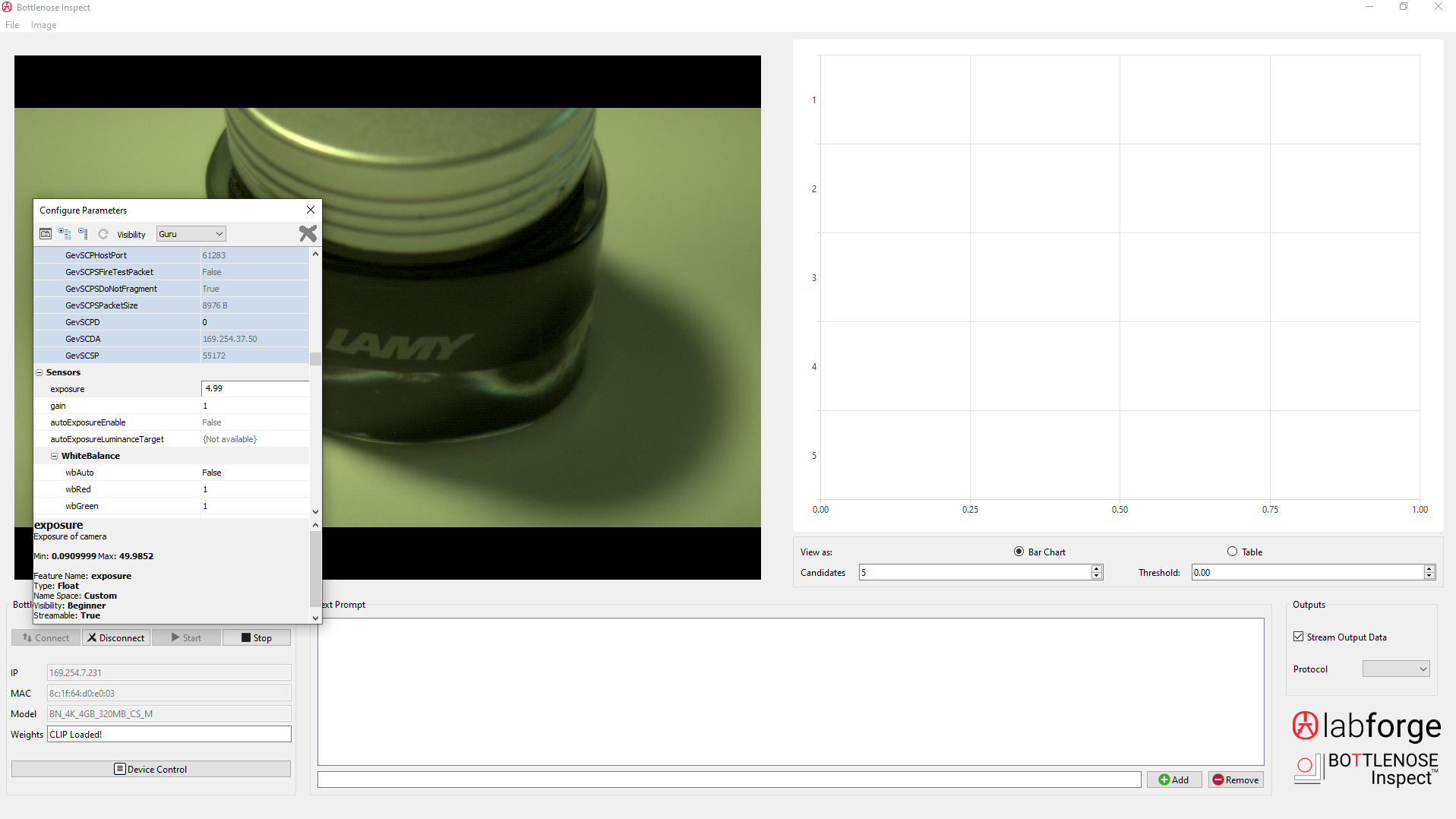

Click Device Control, switch the visibility to 'Guru' at the top, and reduce the exposure value (in milliseconds) to lower the image brightness.

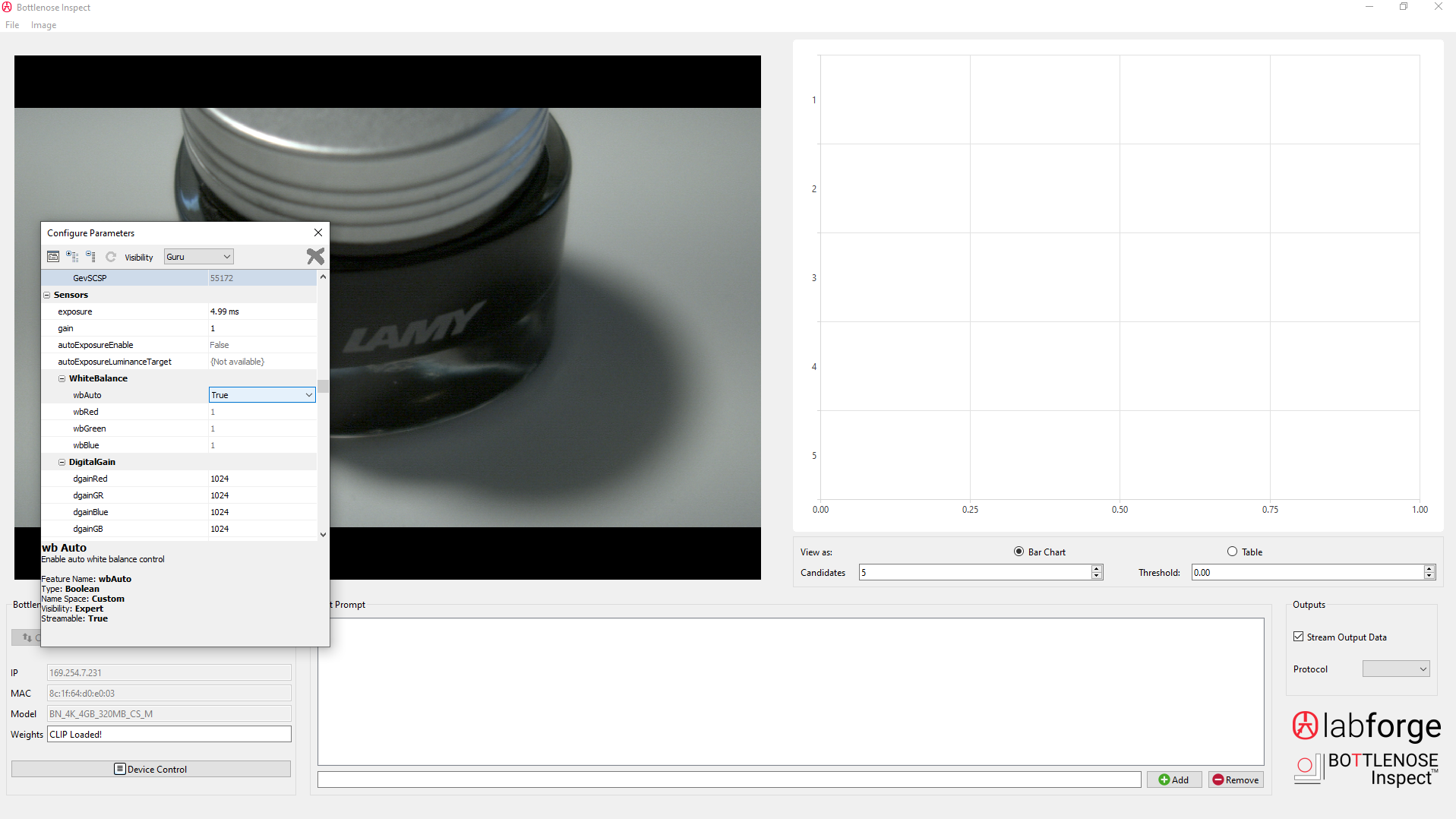

Turn white balance on:

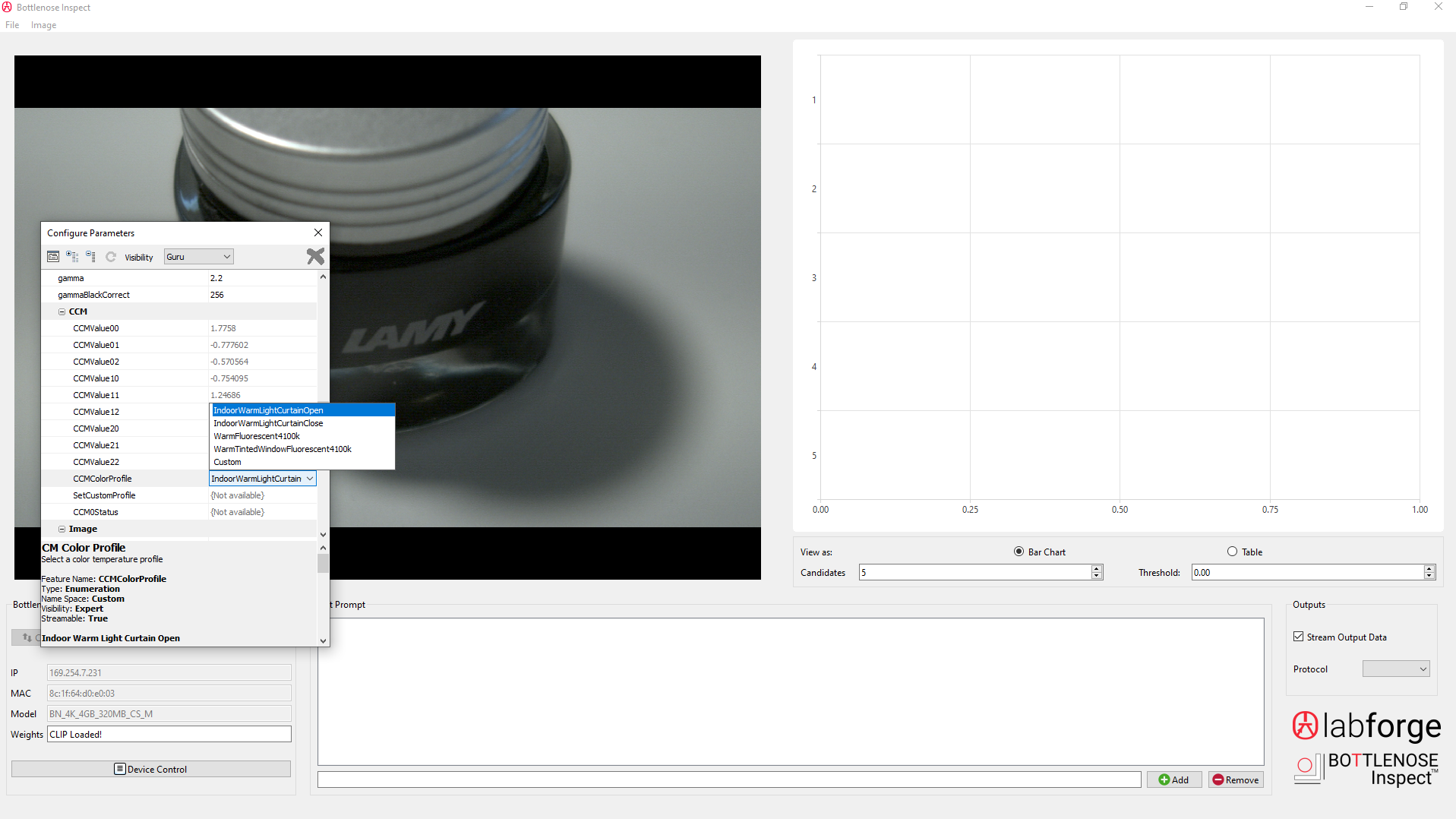

Pick a color profile that suits your environment.

For more details and settings, please refer to the Imaging section.

Ensure that the message 'CLIP Loaded!' appears at the bottom. This confirms that the CLIP AI model has been loaded onto the camera's DSP cluster and DNN block for processing.

Classifying via Text

You can now enter text prompts at the bottom and press Enter or Add. The AI then tries to match the prompts against what the camera sees.

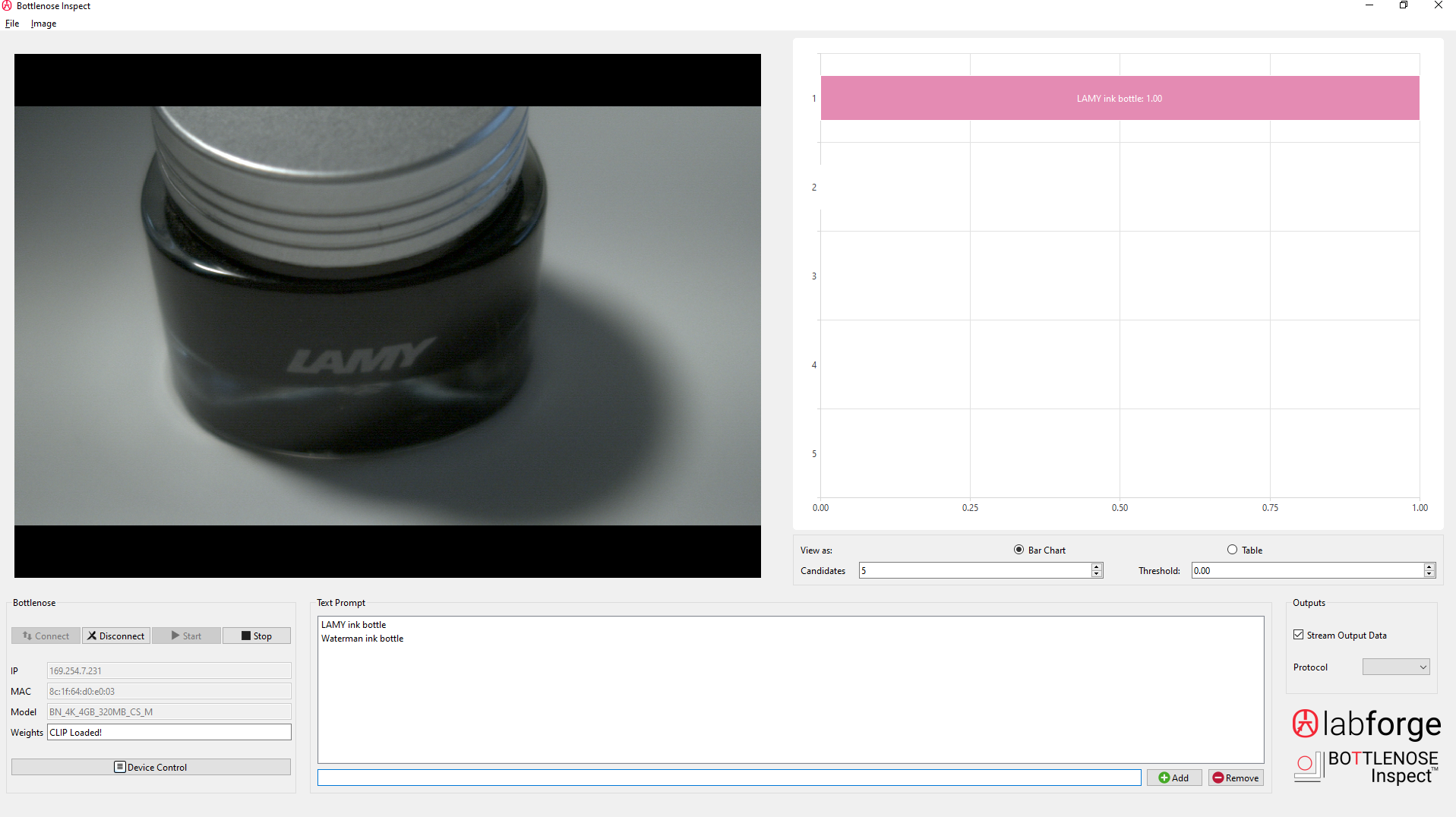

For example, let's say the use case is to distinguish a LAMY ink bottle from a Waterman ink bottle. This can be useful in presence/absence applications where packaging or industrial assembly needs verification. In the past, telling LAMY from Waterman would have required collecting a custom dataset, training a model, and deploying it.

We can now skip that process and enter two text prompts, 'LAMY ink bottle' and 'Waterman ink bottle', as shown below.

The bar chart on the top right shows the top match.

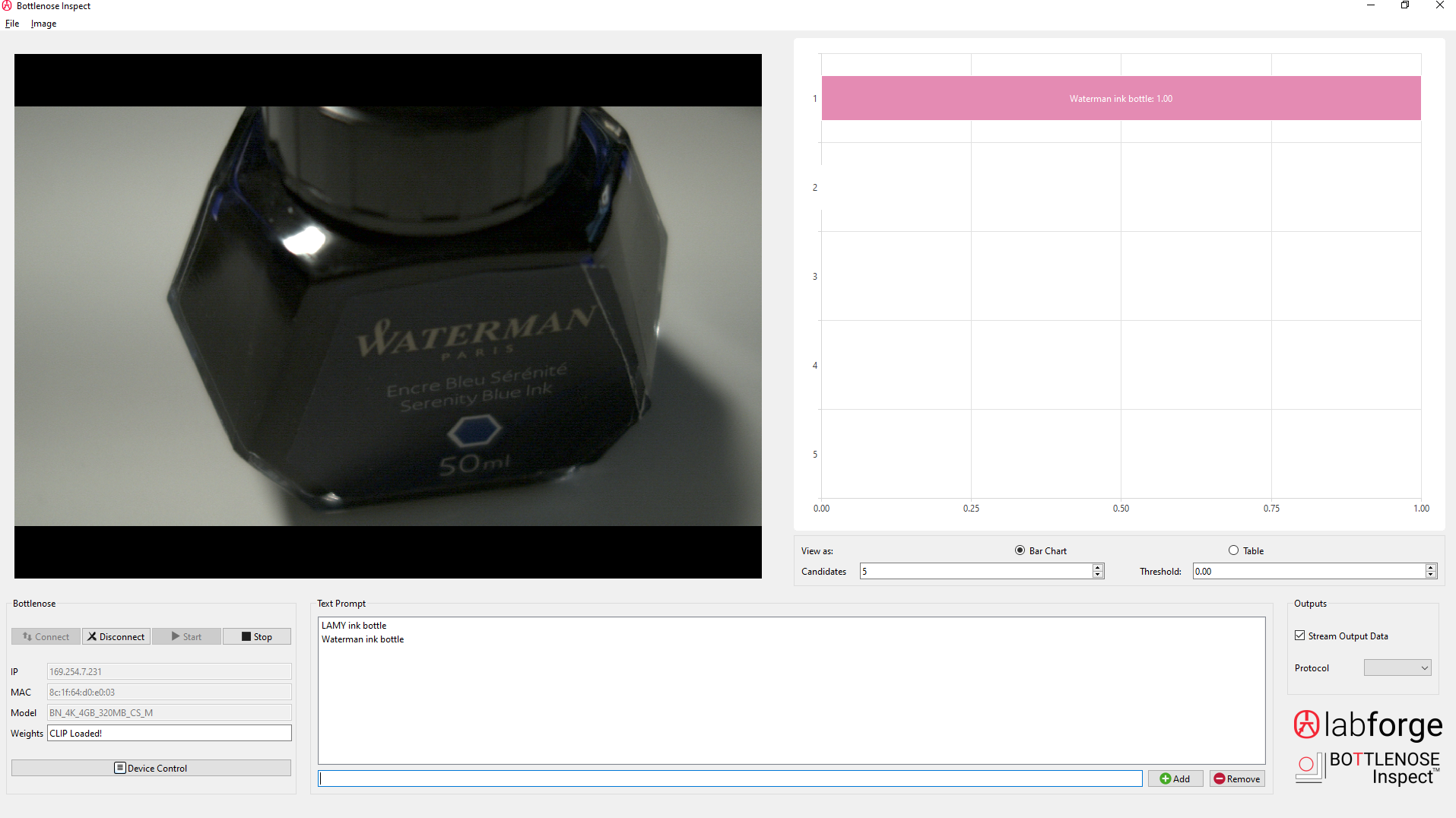
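To give a feel for how prompt matching of this kind generally works, here is a minimal sketch of CLIP-style zero-shot scoring: each prompt and the image are represented as embedding vectors, the cosine similarity between them is scaled, and a softmax turns the result into one score per prompt. This is not the on-camera implementation. The embeddings are made-up three-dimensional vectors (real CLIP embeddings have hundreds of dimensions), and the function names and the `logit_scale` of 100 are assumptions chosen for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def prompt_scores(image_embedding, prompt_embeddings, logit_scale=100.0):
    """Softmax over scaled cosine similarities, one score per prompt."""
    logits = {
        prompt: logit_scale * cosine_similarity(image_embedding, embedding)
        for prompt, embedding in prompt_embeddings.items()
    }
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {prompt: math.exp(value - peak) for prompt, value in logits.items()}
    total = sum(exps.values())
    return {prompt: value / total for prompt, value in exps.items()}


# Hypothetical embeddings, invented for this example only.
image_embedding = [0.9, 0.1, 0.2]
prompt_embeddings = {
    "LAMY ink bottle": [0.8, 0.2, 0.1],
    "Waterman ink bottle": [0.1, 0.9, 0.3],
}

scores = prompt_scores(image_embedding, prompt_embeddings)
top_match = max(scores, key=scores.get)
print(top_match, scores)
```

Because the scores come from a softmax, they always sum to 1 across the prompts entered. A high score therefore means "the best fit among these prompts", not necessarily "this object is definitely present".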

Switching to Waterman:

Success!

The application tells us that we have now switched to Waterman.
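For a presence/absence check, the per-prompt scores could be reduced to a pass/fail decision. The sketch below is one hypothetical way to do that: it passes only when the expected prompt is the top match and its score clears a threshold. The function name `verify_item`, the `min_score` default of 0.8, and the score values are all illustrative assumptions, not measurements or part of the application.

```python
def verify_item(scores, expected, min_score=0.8):
    """Return True when `expected` is the top match and scores at least `min_score`."""
    top = max(scores, key=scores.get)
    return top == expected and scores[top] >= min_score


# Illustrative score values only; they are not taken from a real camera.
scores_with_lamy = {"LAMY ink bottle": 0.93, "Waterman ink bottle": 0.07}
scores_with_waterman = {"LAMY ink bottle": 0.12, "Waterman ink bottle": 0.88}

print(verify_item(scores_with_lamy, "Waterman ink bottle"))      # False
print(verify_item(scores_with_waterman, "Waterman ink bottle"))  # True
```

Requiring a minimum score as well as the top rank helps avoid passing a part when neither prompt fits the scene well and the scores are close to even.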

This demo can be run interactively: let viewers place any object in front of the camera and type whatever prompts they wish to test, using the external keyboard and monitor.
