Feature Detection

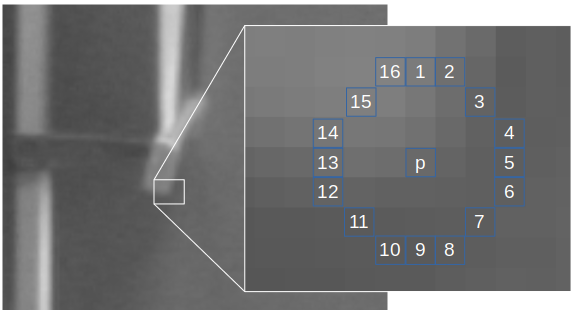

Feature points and their optical flow computed on Bottlenose.

Overview

Bottlenose cameras come with two feature point detectors: FAST (Features from Accelerated Segment Test) and GFTT (Good Features To Track). Feature points are also referred to as keypoints or corners.

FAST

The FAST algorithm was introduced as a high-speed corner detector. For a given input intensity image, Bottlenose uses the following algorithm to compute feature points:

- Select a pixel p in the image.

- Consider a circle of 16 pixels centered around pixel p.

- If at least 9 contiguous pixels in the circle of 16 pixels have an intensity difference with p greater than a given threshold value, pixel p is declared as a corner.

- If needed, apply a non-maximum suppression algorithm to filter multiple adjacent corners.

Refer to the original paper, by Rosten and Drummond ECCV'06, for more details on the FAST algorithm.

Bottlenose uses FAST to determine a feature point. This image shows a pixel p and the surrounding circle of 16 pixels used to determine whether p is a corner.
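The segment test described above can be sketched in a few lines of Python. This is only an illustration, not the on-camera implementation: it assumes the conventional radius-3 circle of 16 pixels and the usual FAST convention that the 9 contiguous pixels must be either all brighter or all darker than p. The function name `is_fast_corner` is hypothetical, and non-maximum suppression is left out.

```python
import numpy as np

# Offsets (dx, dy) of the 16-pixel circle of radius 3, listed in ring order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(image, x, y, threshold, arc_length=9):
    """Return True if pixel (x, y) passes the segment test.

    Assumes (x, y) lies at least 3 pixels away from every image border.
    """
    center = int(image[y, x])
    ring = [int(image[y + dy, x + dx]) for dx, dy in CIRCLE]
    brighter = [value > center + threshold for value in ring]
    darker = [value < center - threshold for value in ring]
    for flags in (brighter, darker):
        run = 0
        # Walk the ring twice so runs that wrap past the start are counted.
        for flag in flags + flags:
            run = run + 1 if flag else 0
            if run >= arc_length:
                return True
    return False


# A bright quadrant whose tip sits at (3, 3): a corner-like pattern.
corner = np.zeros((7, 7), dtype=np.uint8)
corner[3:, 3:] = 100
print(is_fast_corner(corner, 3, 3, threshold=20))
```

A straight edge through p leaves at most 7 contiguous circle pixels on the far side, which is why the test rejects edges while accepting corners.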

GFTT

Besides the FAST detector, Bottlenose can also generate features based on the well-known good features to track work by J. Shi and C. Tomasi, CVPR'94. For a given input intensity image, Bottlenose uses the following algorithm to detect good features to track:

- calculates the corner quality measure for each pixel using either the minimum eigenvalue or the Harris detector method, if so specified.

- determines a threshold value computed from a set quality level and the maximum of the minimum eigenvalue image.

- applies a non-maximum suppression on the detected corners.

- filters the corners to reject those whose quality measure is below the computed threshold.

- orders the remaining corners in descending order of quality.

- throws away each corner for which there is a stronger corner at a distance less than a defined minimum distance.
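The GFTT steps listed above can be sketched with NumPy as follows. This is a simplified illustration under stated assumptions, not the Bottlenose implementation: it uses the minimum eigenvalue measure only, a 3x3 summation window, central-difference gradients, and a threshold scale of 1023 borrowed from the KPQualityLevel description. All function names are hypothetical.

```python
import numpy as np


def window_stack(a, fill):
    """Return the nine 3x3-shifted views of `a`, padded with `fill`."""
    padded = np.pad(a, 1, constant_values=fill)
    h, w = a.shape
    return [padded[i:i + h, j:j + w] for i in range(3) for j in range(3)]


def min_eigenvalue_map(image):
    """Smaller eigenvalue of the 2x2 gradient matrix summed over a 3x3 window."""
    gy, gx = np.gradient(image.astype(np.float64))
    sxx = sum(window_stack(gx * gx, 0.0))
    syy = sum(window_stack(gy * gy, 0.0))
    sxy = sum(window_stack(gx * gy, 0.0))
    return 0.5 * (sxx + syy - np.sqrt((sxx - syy) ** 2 + 4.0 * sxy ** 2))


def good_features(image, quality_level, min_distance, max_corners, scale=1023):
    quality = min_eigenvalue_map(image)
    # Threshold derived from the quality level and the strongest response.
    threshold = quality.max() * quality_level / scale
    # Non-maximum suppression: keep pixels that are the maximum of their 3x3 window.
    local_max = np.max(window_stack(quality, -np.inf), axis=0)
    mask = (quality == local_max) & (quality >= threshold) & (quality > 0)
    ys, xs = np.nonzero(mask)
    # Strongest corners first.
    order = np.argsort(-quality[ys, xs], kind="stable")
    kept = []
    for i in order:
        x, y = int(xs[i]), int(ys[i])
        # Drop a corner if a stronger one was already kept closer than min_distance.
        if all((x - kx) ** 2 + (y - ky) ** 2 >= min_distance ** 2 for kx, ky in kept):
            kept.append((x, y))
            if len(kept) == max_corners:
                break
    return kept


# Synthetic test image: a bright square on a dark background.
square = np.zeros((20, 20), dtype=np.uint8)
square[5:15, 5:15] = 255
print(good_features(square, quality_level=512, min_distance=5, max_corners=10))
```

On the synthetic square the only surviving points are its four corners; raising `min_distance` above the side length removes the weaker neighbors of each kept corner.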

Enabling Feature Point Detection

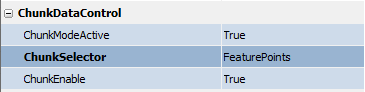

Bottlenose detects and transmits feature points through the chunk data interface of its GigE Vision protocol. The following steps show how to enable feature points with either the Bottlenose Stereo Viewer or eBusPlayer.

- Launch Stereo Viewer or eBusPlayer and connect to the camera.

- Click on Device Control to open the control panel.

- Navigate to ChunkDataControl.

- Set ChunkModeActive to True to turn on chunk data transfer.

- Set ChunkSelector to FeaturePoints to activate feature points.

- Finally, set ChunkEnable to True to enable the feature point detection and transmission.

Feature Point Chunk Data Settings

Feature Point Parameters

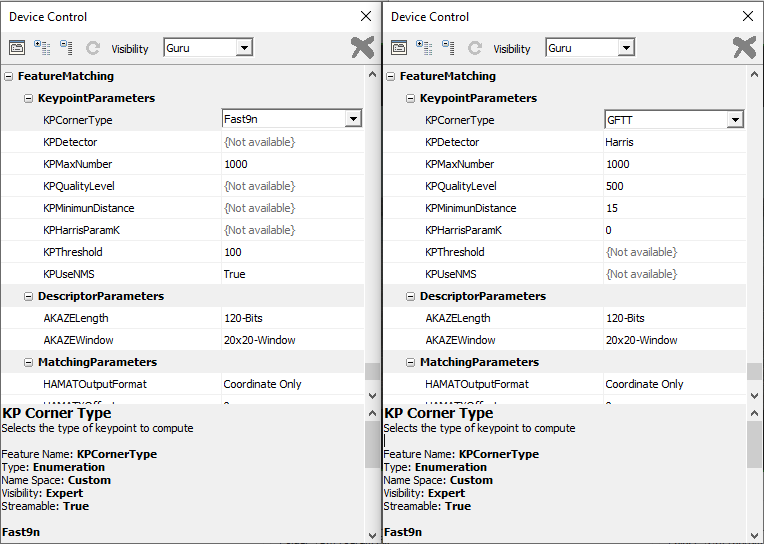

Bottlenose exposes several parameters that influence how it detects feature points with either FAST or GFTT. To access these parameters, navigate to KeypointParameters under the FeatureMatching section. You may need to set Visibility to Guru to access these settings.

FAST and GFTT settings exposed by a Bottlenose camera.

Here is a brief description of each parameter:

- KPCornerType: determines the feature points detector to use, FAST or GFTT.

- KPDetector: available only for GFTT. Choose between Harris and minimum eigenvalue as an underlying detector for GFTT.

- KPMaxNumber: Maximum number of feature points to output. This can be up to 65535 for FAST and 8192 for GFTT.

- KPQualityLevel: Quality level used by GFTT to threshold feature points. Bottlenose computes a threshold t from the maximum of the minimum eigenvalue using the equation t = max_value × (quality_level / 1023). Thus, the number of points detected decreases as the quality level increases.

- KPMinimumDistance: Determines the minimum Euclidean distance between two feature points. Available only for GFTT.

- KPHarrisParamK: is the free parameter of the Harris corner detector. This parameter is ignored if the GFTT detector is not Harris.

- KPThreshold: Pixel value threshold for the FAST algorithm.

- KPUseNMS: if set to True, FAST applies non-maximum suppression to filter redundant feature points.
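To make the effect of KPQualityLevel concrete, here is a small worked example of the threshold equation t = max_value × (quality_level / 1023). The corner quality values are made up for illustration, and the helper names are hypothetical.

```python
def gftt_threshold(max_value, quality_level, scale=1023):
    """Threshold t = max_value x (quality_level / 1023)."""
    return max_value * (quality_level / scale)


def surviving(qualities, quality_level):
    """Return the quality values that are not rejected at this quality level."""
    t = gftt_threshold(max(qualities), quality_level)
    return [q for q in qualities if q >= t]


# Made-up corner quality values (for example, minimum eigenvalues).
qualities = [5.0, 40.0, 120.0, 300.0, 800.0]

for level in (0, 128, 512, 1023):
    kept = surviving(qualities, level)
    print(f"quality_level={level:4d} keeps {len(kept)} of {len(qualities)} corners")
```

Because the threshold scales with the strongest response in the image, the same quality level can keep a different number of points from frame to frame.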

Receiving Feature Points with Python

Feature points can be streamed from Bottlenose using Python. For details on how to interact with the camera and stream feature point data, refer to our sample code.

The following code snippet activates the chunk mode and enables the transmission of feature points.

```python
# Get device parameters from the GigE Vision device
# (`device` is an already-connected camera object)
device_params = device.GetParameters()

# Activate chunk mode
chunkMode = device_params.Get("ChunkModeActive")
chunkMode.SetValue(True)

# Select feature points
chunkSelector = device_params.Get("ChunkSelector")
chunkSelector.SetValue("FeaturePoints")

# Enable the feature points chunk
chunkEnable = device_params.Get("ChunkEnable")
chunkEnable.SetValue(True)
```

Receiving Feature Points with ROS2

The ROS2 driver for Bottlenose supports feature points. Enable them when you launch the node, as follows:

```shell
ros2 run bottlenose_camera_driver bottlenose_camera_driver_node --ros-args \
  -p mac_address:="<MAC>" \
  -p stereo:=true \
  -p feature_points:=fast9 \
  -p features_threshold:=10
```

This command will turn on the stereo acquisition and use FAST as the feature detector with a threshold of 10. All other image processing parameters will be set to their defaults. This node will then publish 4 topics:

- image_color and image_color_1 for the left and right color images, respectively

- features and features_1, an ImageMarker2D array of feature points from the left and right images
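If the node needs to be started from a script, the same command line can be assembled in Python. The sketch below only builds the argument list from the parameters shown above (mac_address, stereo, feature_points, features_threshold); the helper name `bottlenose_run_command` is hypothetical, and `<MAC>` remains a placeholder for the camera's MAC address.

```python
import shlex


def bottlenose_run_command(mac_address, stereo=True, feature_points="fast9",
                           features_threshold=10):
    """Build the `ros2 run` argument list for the Bottlenose driver node."""
    params = {
        "mac_address": mac_address,
        "stereo": str(stereo).lower(),  # ROS2 expects true/false
        "feature_points": feature_points,
        "features_threshold": features_threshold,
    }
    command = ["ros2", "run", "bottlenose_camera_driver",
               "bottlenose_camera_driver_node", "--ros-args"]
    for name, value in params.items():
        command += ["-p", f"{name}:={value}"]
    return command


command = bottlenose_run_command("<MAC>")
# Print a shell-safe version of the command for inspection.
print(shlex.join(command))
```

The resulting list can be passed to `subprocess.run(command)` on a machine where ROS2 and the driver are installed.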
