AI Processing

Overview

Each Bottlenose camera is equipped with a deep neural network (DNN) processing module. The module can run detection, segmentation, and classification networks, supports 16-bit floating point data processing, and provides hardware acceleration for many layer types. To run on this module, a model must first be converted to Bottlenose's internal representation. Refer to our model repository for ready-to-use pre-trained models.
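To get a feel for what 16-bit floating point means for the values a model processes, the sketch below rounds numbers through IEEE 754 binary16 using Python's standard `struct` module (format `e`). Binary16 keeps 11 significant bits, so the relative rounding error for normal values is at most 2^-11 (about 0.05%), and magnitudes above 65504 cannot be represented. This only illustrates the number format itself; how the Bottlenose model converter rounds or rescales values is an assumption not covered here, and `to_fp16` is a hypothetical helper.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value and back."""
    # "<e" packs a float as little-endian binary16; unpacking returns it as a Python float.
    # struct raises OverflowError if x is too large for binary16.
    return struct.unpack("<e", struct.pack("<e", x))[0]


for w in (0.1, 1.0 / 3.0, 1234.5678):
    h = to_fp16(w)
    print(f"{w!r:>22} -> {h!r:<22} relative error {abs(h - w) / abs(w):.1e}")
```

Note how 1234.5678 becomes 1235.0: at that magnitude the spacing between adjacent binary16 values is already 1.0.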

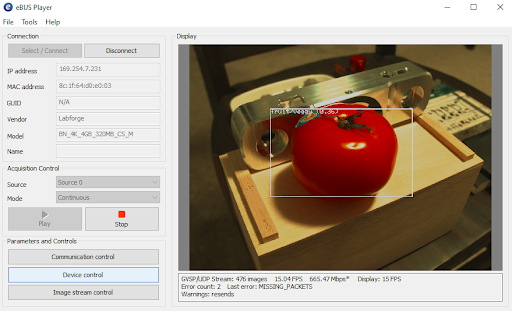

YOLOv3 trained on fruits and vegetables, running on Bottlenose.

Enabling AI Processing

Bottlenose runs AI inference on the camera and transmits the results through the chunk data interface of its GigE Vision protocol. For example, the following steps show how to enable bounding box streaming using either the Bottlenose Stereo Viewer or eBusPlayer:

- Launch

Stereo VieweroreBusPlayerand connect to the camera - Click on

Device Controlto open the control panel - Navigate to

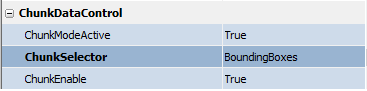

ChunkDataControl - Set

ChunkActiveModetoTrueto turn on chunk data transfer - Set

ChunkSelectortoBoundingBoxesto activate the bounding boxes chunk - Finally, set

ChunkEnabletoTrueto enable the transmission of bounding boxes if an object detector is loaded.

AI Bounding boxes Chunk Data Settings

AI Parameters

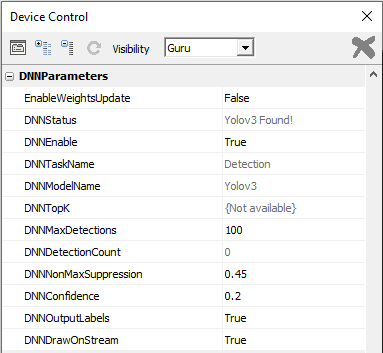

Bottlenose exposes a set of parameters that control the processing of AI models. These parameters are located under the DNNParameters subsection of the device control. Visibility may need to be set to Guru mode to access these settings.

A view of the AI settings showing a Yolov3 model loaded by a Bottlenose camera.

Here is a brief description of the commonly used settings:

- DNNStatus: displays status messages related to AI processing.

- DNNEnable: enables or disables AI processing.

- DNNTaskName: displays the name of the current AI task.

- DNNModelName: displays the name of the currently running AI model.

- DNNTopK: available only for classifiers. Sets the number of top-ranked predictions the classifier outputs.

- DNNMaxDetections: sets the maximum number of detections the model outputs.

- DNNDetectionCount: reports the number of objects detected in the current frame.

- DNNNonMaxSuppression: sets the threshold for non-maximum suppression.

- DNNConfidence: sets the confidence threshold for detected objects.

- DNNOutputLabels: determines whether class labels are included in the results transmitted as chunk data.

- DNNDrawOnStream: determines whether AI results are drawn on the image stream.
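The detection thresholds above interact in a fixed order in most detection pipelines. The following host-side sketch shows the conventional post-processing that DNNConfidence, DNNNonMaxSuppression, DNNMaxDetections and DNNTopK typically correspond to: confidence filtering, greedy class-agnostic non-maximum suppression by intersection over union (IoU), a cap on the number of detections, and top-k selection for classifiers. It illustrates the general technique under that assumption and is not the camera's firmware; the `Box` type and function names are hypothetical, and the on-camera implementation may differ (for example, NMS may be applied per class).

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Box:
    # Hypothetical detection record: corner coordinates in pixels, score in [0, 1], class label.
    x1: float
    y1: float
    x2: float
    y2: float
    score: float
    label: str


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    iy = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = ix * iy
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes: Sequence[Box], confidence: float,
                      nms_threshold: float, max_detections: int) -> List[Box]:
    """Sketch of confidence threshold -> NMS -> detection cap."""
    # 1. Drop boxes below the confidence threshold (cf. DNNConfidence), best first.
    candidates = sorted((b for b in boxes if b.score >= confidence),
                        key=lambda b: b.score, reverse=True)
    kept: List[Box] = []
    for box in candidates:
        # 3. Stop once the detection cap is reached (cf. DNNMaxDetections).
        if len(kept) >= max_detections:
            break
        # 2. Greedy NMS: discard a box that overlaps an already kept, higher-scoring
        #    box by more than the IoU threshold (cf. DNNNonMaxSuppression).
        if all(iou(box, k) <= nms_threshold for k in kept):
            kept.append(box)
    return kept


def top_k(scores: Sequence[float], labels: Sequence[str], k: int) -> List[Tuple[str, float]]:
    """Sketch of DNNTopK for a classifier: the k highest-scoring (label, score) pairs."""
    ranked = sorted(zip(labels, scores), key=lambda p: p[1], reverse=True)
    return ranked[:k]
```

For example, with a confidence threshold of 0.25 and an NMS threshold of 0.45, two boxes at (10, 10, 50, 50) and (12, 12, 52, 52) scored 0.9 and 0.8 overlap with an IoU of about 0.82, so only the 0.9 box survives, while a separate box scored 0.1 is removed by the confidence threshold.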

Some parameters, such as DNNEnable, can only be changed while the camera is not streaming. Stop the stream before changing their values.
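A minimal sketch of that stop, change, restart sequence in Python, assuming `device` is a connected eBUS `PvDevice` (as in the eBUS SDK Python samples) and that the camera implements the standard GenICam AcquisitionStart and AcquisitionStop commands. The helper name is hypothetical, eBUS result codes are not checked, and your application still needs buffers queued on its stream before images flow again.

```python
def set_parameter_while_stopped(device, name, value):
    """Stop streaming, set a parameter that is locked during streaming, then restart.

    Assumes `device` is a connected eBUS PvDevice; `name` is a parameter such as "DNNEnable".
    """
    params = device.GetParameters()
    # Stop acquisition on the camera, then disable streaming on the device.
    params.Get("AcquisitionStop").Execute()
    device.StreamDisable()
    try:
        params.Get(name).SetValue(value)
    finally:
        # Re-enable streaming even if setting the value raised a Python exception.
        device.StreamEnable()
        params.Get("AcquisitionStart").Execute()
```

Usage: `set_parameter_while_stopped(device, "DNNEnable", False)`.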

AI Processing with Python

Python can be used to programmatically upload models and stream AI data from any Bottlenose camera. For complete examples, refer to our sample code.

The following code snippet activates chunk mode and enables the transmission of bounding boxes.

# Get device parameters from the GigE Vision device (assumes `device` is a connected eBUS PvDevice)

device_params = device.GetParameters()

# Activate chunk mode

chunkMode = device_params.Get("ChunkModeActive")

chunkMode.SetValue(True)

# Select bounding boxes as chunk

chunkSelector = device_params.Get("ChunkSelector")

chunkSelector.SetValue("BoundingBoxes")

# Enable bounding boxes transmission

chunkEnable = device_params.Get("ChunkEnable")

chunkEnable.SetValue(True)

AI Processing with ROS2

The Bottlenose ROS2 driver supports AI processing. Enable it when launching the node, for example:

ros2 run bottlenose_camera_driver bottlenose_camera_driver_node --ros-args \
-p mac_address:="<MAC>" \
-p ai_model:=<absolute_path>yolov3_1_416_416_3.tar \
-p DNNConfidence:=0.25

This command starts the driver with AI processing enabled, running a YOLOv3 model with a confidence threshold of 0.25. To view the results, you can use our Detection Visualizer tool to annotate the images with the detections and then subscribe to the annotated images with a viewer such as Foxglove Studio. For more AI models, refer to our model repository.
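If you start the driver from a Python script rather than a terminal, the same command can be built as an argument list and passed to `subprocess.run`. This is a minimal sketch; `driver_command` is a hypothetical helper that sets only the three parameters shown above. Because no shell is involved, the MAC address needs no quotes: the shell strips them before the node sees the argument anyway.

```python
import shlex
from typing import List


def driver_command(mac_address: str, ai_model: str, confidence: float = 0.25) -> List[str]:
    """Build the `ros2 run` argument list for the Bottlenose driver with AI enabled."""
    return [
        "ros2", "run", "bottlenose_camera_driver", "bottlenose_camera_driver_node",
        "--ros-args",
        "-p", f"mac_address:={mac_address}",
        "-p", f"ai_model:={ai_model}",
        "-p", f"DNNConfidence:={confidence}",
    ]


# Print a copy-pasteable shell form of the command (placeholders kept from the example above).
print(shlex.join(driver_command("<MAC>", "<absolute_path>yolov3_1_416_416_3.tar")))
```

To actually launch the node, pass the list to `subprocess.run(cmd, check=True)`, which blocks until the node exits.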

Updated 4 months ago