Feature Matching

Overview

Matching feature points is a fundamental operation in many computer vision applications, such as tracking and SLAM. A Bottlenose stereo camera can match a list of feature points from the left image against a list of feature points from the right image. The similarity of two feature points is measured by the Hamming distance between their descriptors.
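As a minimal illustration of this similarity measure, the sketch below computes the Hamming distance between two binary descriptors stored as Python `bytes`. The byte layout and the 4-byte descriptor length are assumptions chosen for illustration; they do not describe the format Bottlenose uses on the wire.

```python
def hamming_distance(desc_a: bytes, desc_b: bytes) -> int:
    """Count the bits that differ between two equal-length binary descriptors."""
    if len(desc_a) != len(desc_b):
        raise ValueError("descriptors must have the same length")
    # XOR each byte pair and count the set bits in the result
    return sum(bin(a ^ b).count("1") for a, b in zip(desc_a, desc_b))


# Two hypothetical 4-byte (32-bit) descriptors that differ in 3 bits
d1 = bytes([0b10110000, 0x00, 0xFF, 0x0F])
d2 = bytes([0b10100000, 0x00, 0xFE, 0x1F])
print(hamming_distance(d1, d2))  # 3
```

A smaller distance means more similar descriptors; identical descriptors have a distance of 0.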

Bottlenose has a dedicated hardware matching module that computes the Hamming distance between a source and a reference feature point for every combination of points in the input lists. For each source feature point, the module then finds the reference points with the shortest and second-shortest Hamming distances and associates the source point with the corresponding reference point.
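The exhaustive best/second-best search can be modelled in software as follows. This is a plain-Python sketch of the idea, not the hardware implementation: the function names and the tuple return format are hypothetical, and the search areas and thresholds described elsewhere on this page are deliberately left out.

```python
from typing import List, Optional, Tuple

NO_MATCH = 1 << 30  # sentinel distance, larger than any real descriptor distance


def hamming_distance(desc_a: bytes, desc_b: bytes) -> int:
    # Number of differing bits between two equal-length descriptors
    return sum(bin(a ^ b).count("1") for a, b in zip(desc_a, desc_b))


def best_two_matches(
    source: bytes, references: List[bytes]
) -> Tuple[Optional[int], int, Optional[int], int]:
    """Return (best_index, best_distance, second_index, second_distance).

    An index is None (and its distance NO_MATCH) when not enough
    references are available.
    """
    best_idx, best_dist = None, NO_MATCH
    second_idx, second_dist = None, NO_MATCH
    for idx, ref in enumerate(references):
        dist = hamming_distance(source, ref)
        if dist < best_dist:
            # The previous best becomes the second best
            second_idx, second_dist = best_idx, best_dist
            best_idx, best_dist = idx, dist
        elif dist < second_dist:
            second_idx, second_dist = idx, dist
    return best_idx, best_dist, second_idx, second_dist


def match_all(sources: List[bytes], references: List[bytes]):
    # Evaluate every (source, reference) combination
    return [best_two_matches(s, references) for s in sources]


refs = [b"\x00", b"\x0f", b"\xff"]
print(match_all([b"\x01", b"\xfe"], refs))
# [(0, 1, 1, 3), (2, 1, 1, 5)]
```

Keeping the second-best distance alongside the best one is what makes the ratio test on the two distances possible.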

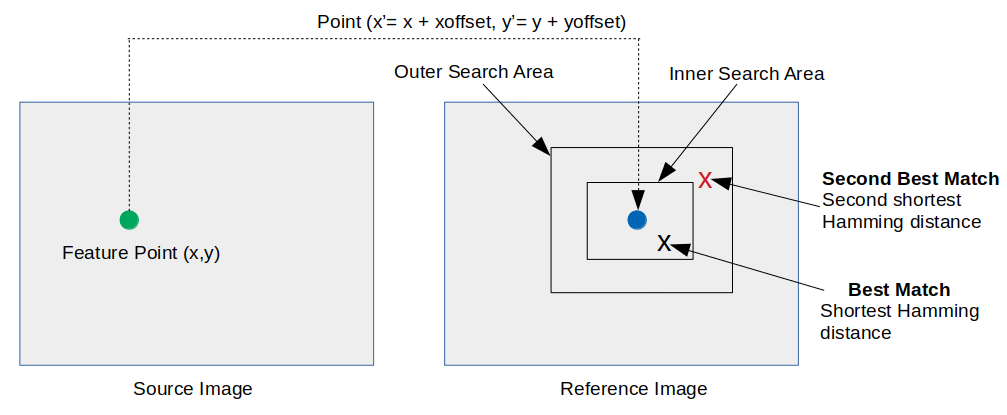

Given a source feature point p(x,y), Bottlenose searches for the matching reference point within two nested search areas in the reference image. The best match must fall within the inner search area; otherwise, the point is marked as unmatched. The second-best match, however, may lie anywhere inside the outer search area. The sizes of both areas are configurable. The search areas are centered on a pixel whose coordinates are obtained by offsetting those of the source feature point, and the offset parameters can be adjusted to shift the search range.

Feature matching mechanism within Bottlenose
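The search-area geometry can be sketched as follows, assuming axis-aligned rectangles defined by a shared center and half-extents, with inclusive boundaries. The class and function names are hypothetical; the offsets and half-extents loosely correspond to the HAMAT* parameters described under Feature Matching Parameters, and all numeric values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class SearchArea:
    """Axis-aligned rectangle given by its center and half-extents (pixels)."""
    cx: float
    cy: float
    half_w: float
    half_h: float

    def contains(self, x: float, y: float) -> bool:
        # Inclusive bounds are an assumption of this sketch
        return abs(x - self.cx) <= self.half_w and abs(y - self.cy) <= self.half_h


def search_areas(x, y, x_offset, y_offset, rect1, rect2):
    """Build the inner and outer search areas for source point p(x, y).

    rect1 and rect2 are (half_width, half_height) pairs for the inner
    and outer areas; both areas share the offset center.
    """
    cx, cy = x + x_offset, y + y_offset
    return SearchArea(cx, cy, *rect1), SearchArea(cx, cy, *rect2)


# Hypothetical values: source point (320, 240), offset of -40 px in x
inner, outer = search_areas(320, 240, -40, 0, rect1=(16, 4), rect2=(48, 8))
print(inner.contains(285, 242))  # True: a best match may lie here
print(inner.contains(250, 240))  # False: outside the inner area
print(outer.contains(250, 240))  # True: still valid for the second-best match
```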

Enabling Feature Matching

Bottlenose computes and transmits matched feature points through the chunk data interface of its GigE Vision protocol. The following steps show how to enable feature matching with either the Bottlenose Stereo Viewer or eBusPlayer.

- Launch

Stereo VieweroreBusPlayerand connect to the camera - Click on

Device Controlto open the control panel - Navigate to

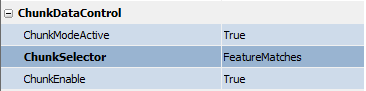

ChunkDataControl - Set

ChunkActiveModetoTrueto turn on chunk data transfer - Set

ChunkSelectortoFeatureMatchesto activate feature matching - Finally, set

ChunkEnabletoTrueto enable the feature-matching transmission

Feature matching chunk data settings.

Bottlenose does not transmit the associated feature points unless explicitly configured to do so. We recommend also enabling feature point transmission so that the matches can be correlated with the feature points they refer to. Refer to the feature points page to learn how.
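Once both chunks are available, index-based matches can be paired with the transmitted feature points. The sketch below assumes a hypothetical, already-decoded layout: one `(x, y)` list per image and, for each left (source) point, the index of its matched right (reference) point or `None` when unmatched. The actual chunk layout is not described here, so treat the data structures and the `pair_matches` name as illustrative only.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[int, int]


def pair_matches(
    left_points: Sequence[Point],
    right_points: Sequence[Point],
    match_indices: Sequence[Optional[int]],
) -> List[Tuple[Point, Point]]:
    """Turn index-based matches into (left, right) coordinate pairs.

    Hypothetical layout: match_indices[i] is the index into right_points
    matched to left_points[i], or None if the point is unmatched.
    """
    pairs = []
    for left_idx, right_idx in enumerate(match_indices):
        if right_idx is None:
            continue  # source point was marked as unmatched
        pairs.append((left_points[left_idx], right_points[right_idx]))
    return pairs


left = [(100, 50), (200, 80), (300, 120)]
right = [(62, 50), (261, 121)]
print(pair_matches(left, right, [0, None, 1]))
# [((100, 50), (62, 50)), ((300, 120), (261, 121))]
```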

Feature Matching Parameters

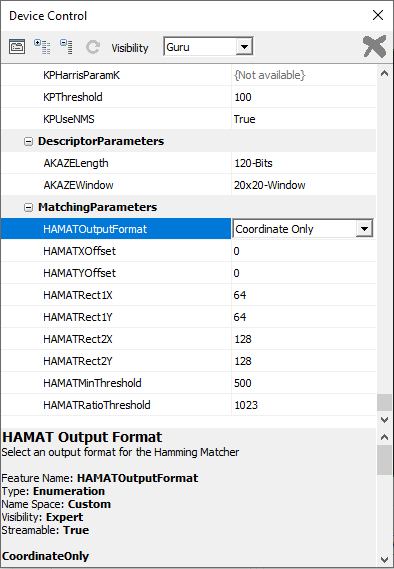

The list of parameters for the matching algorithm can be accessed by navigating to MatchingParamaters located under the FeatureMatching header.

A view of the Bottlenose feature matching parameters

Following is a description of each of the parameters:

- HAMATOutputFormat: Selects the output format for the list of matched feature points. Bottlenose can report each match either as an index into the list of reference feature points or as the image coordinates of the matched reference point. For each matched point, Bottlenose can optionally provide additional details, such as the second-best match and the computed distances.

- HAMATXOffset and HAMATYOffset: Horizontal and vertical offsets added to the source feature point coordinates to obtain the center of the search areas in the reference image.

- HAMATRect1X and HAMATRect1Y: Half-width and half-height of the inner search area.

- HAMATRect2X and HAMATRect2Y: Half-width and half-height of the outer search area.

- HAMATMinThreshold: Hamming distance threshold for the best match. The best match is accepted only if it has the smallest distance and that distance is below this threshold.

- HAMATRatioThreshold: Ratio threshold that ensures a clear distinction between the best and second-best matches. A match is accepted only when the ratio of the best-match distance to the second-best-match distance is below this threshold. If the two distances are equal, Bottlenose considers that no sufficiently good match exists.
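The two threshold tests can be combined into a single acceptance check, sketched below. The strict `<` comparisons, the integer distances, and representing the ratio threshold as a plain float are assumptions; the camera may encode these parameters differently (for example as fixed-point values). The function name is hypothetical.

```python
def accept_match(best_dist: int, second_dist: int,
                 min_threshold: int, ratio_threshold: float) -> bool:
    """Decide whether a best match passes both threshold tests.

    Assumes best_dist <= second_dist, as produced by a best/second-best search.
    """
    if best_dist >= min_threshold:
        return False  # best match is not similar enough
    if best_dist == second_dist:
        return False  # equal distances: no clearly better match
    # second_dist > best_dist >= 0 here, so the division is safe
    return best_dist / second_dist < ratio_threshold


print(accept_match(10, 40, min_threshold=30, ratio_threshold=0.8))  # True
print(accept_match(35, 80, min_threshold=30, ratio_threshold=0.8))  # False: best too far
print(accept_match(20, 22, min_threshold=30, ratio_threshold=0.8))  # False: ratio too close to 1
print(accept_match(20, 20, min_threshold=30, ratio_threshold=0.8))  # False: equal distances
```

Checking equality explicitly keeps the "equal distances means no match" rule independent of the ratio threshold value.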

Receiving Matches with Python

Feature matches can be streamed from Bottlenose using Python. For details on how to interact with the camera and stream matching data, refer to our sample code.

The following code snippet activates the chunk mode and enables the transmission of feature matches.

```python
# `device` is assumed to be an already-connected GigE Vision device,
# created as in the sample code
# Get the device parameters
device_params = device.GetParameters()

# Activate chunk mode
chunkMode = device_params.Get("ChunkModeActive")
chunkMode.SetValue(True)

# Select the feature matches chunk
chunkSelector = device_params.Get("ChunkSelector")
chunkSelector.SetValue("FeatureMatches")

# Enable the feature matches chunk
chunkEnable = device_params.Get("ChunkEnable")
chunkEnable.SetValue(True)
```

Receiving Matches with ROS2

The ROS2 driver for Bottlenose supports feature matches. You can enable them when launching the node, for example:

```shell
ros2 run bottlenose_camera_driver bottlenose_camera_driver_node --ros-args \
  -p mac_address:="<MAC>" \
  -p stereo:=true \
  -p feature_points:=fast9 \
  -p features_threshold:=10 \
  -p sparse_point_cloud:=true \
  -p AKAZELength:=486 \
  -p AKAZEWindow:=20
```

This command turns on stereo acquisition and uses FAST as the feature detector with a threshold of 10. Image processing parameters not set on the command line keep their default values. The node publishes a matches topic that carries the matched feature points.
