Sparse Stereo

Overview

Once calibrated, a stereo Bottlenose camera can produce a triangulated sparse 3D point cloud. To do so, Bottlenose detects feature points in the left and right images using either FAST or GFTT. It then computes an AKAZE descriptor for each feature point and matches the descriptors between the left and right images. Correctly matched point pairs are triangulated to produce the final point cloud.
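The segment test behind the textbook FAST-9 detector can be sketched in plain Python. This is a minimal illustration, not Bottlenose's on-camera implementation. The function names are hypothetical, the image is assumed to be a list of rows of grey values, and corner scoring and non-maximum suppression are omitted. A pixel counts as a corner when at least 9 contiguous pixels on a 16-pixel circle of radius 3 are all brighter than the centre by more than the threshold, or all darker by more than the threshold. A higher threshold therefore yields fewer, stronger features.

```python
# 16-pixel Bresenham circle of radius 3 around a candidate pixel,
# as (dx, dy) offsets listed clockwise starting from the top.
CIRCLE = [
    (0, -3), (1, -3), (2, -2), (3, -1),
    (3, 0), (3, 1), (2, 2), (1, 3),
    (0, 3), (-1, 3), (-2, 2), (-3, 1),
    (-3, 0), (-3, -1), (-2, -2), (-1, -3),
]


def is_fast9_corner(img, x, y, threshold):
    """Return True if pixel (x, y) passes the FAST-9 segment test.

    img is a list of rows of grey values (hypothetical representation);
    (x, y) must lie at least 3 pixels away from every image border.
    """
    centre = img[y][x]
    # Classify each circle pixel: +1 brighter, -1 darker, 0 similar.
    states = []
    for dx, dy in CIRCLE:
        p = img[y + dy][x + dx]
        if p > centre + threshold:
            states.append(1)
        elif p < centre - threshold:
            states.append(-1)
        else:
            states.append(0)
    # Look for 9 contiguous identical non-zero states. Scanning the list
    # twice handles runs that wrap around the end of the circle.
    for sign in (1, -1):
        run = 0
        for s in states + states:
            run = run + 1 if s == sign else 0
            if run >= 9:
                return True
    return False


def detect_fast9(img, threshold):
    """Return (x, y) of every pixel passing the test, skipping a 3-pixel border."""
    h, w = len(img), len(img[0])
    return [(x, y)
            for y in range(3, h - 3)
            for x in range(3, w - 3)
            if is_fast9_corner(img, x, y, threshold)]
```

For example, a single bright pixel on a dark 9×9 image is reported as the only feature, while a uniform image produces none.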

During matching, Bottlenose uses the left image as the source and the right image as the reference. As a result, the generated sparse 3D point cloud is always expressed in the left camera's coordinate frame. Refer to the feature matching page for details on how the feature points are matched.
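The idea of using the left image as the source can be illustrated with a hypothetical brute-force matcher. For each left feature, it searches the right image for the nearest descriptor by Hamming distance, the usual metric for binary descriptors such as AKAZE's. This is not the algorithm described on the feature matching page. Descriptors are modelled as Python ints, and the `max_distance` and `max_row_diff` limits are illustrative assumptions. The matcher relies on the row alignment of rectified stereo images.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_left_to_right(left, right, max_distance=80, max_row_diff=2):
    """Match every left (source) feature to its nearest right (reference) feature.

    left, right: lists of (x, y, descriptor) with the descriptor stored as an int.
    max_distance, max_row_diff: hypothetical acceptance limits.
    Returns a list of (left_index, right_index, hamming_distance).
    """
    matches = []
    for i, (xl, yl, dl) in enumerate(left):
        best_j, best_d = None, max_distance + 1
        for j, (xr, yr, dr) in enumerate(right):
            # In a rectified pair, a valid match lies on (almost) the same row
            # and further left in the right image (positive disparity).
            if abs(yr - yl) > max_row_diff or xr >= xl:
                continue
            d = hamming(dl, dr)
            if d < best_d:
                best_j, best_d = j, d
        if best_j is not None:
            matches.append((i, best_j, best_d))
    return matches
```

Because the search always starts from a left feature, each match (and later each 3D point) is indexed by a position in the left image.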

Enable Sparse Point Cloud

When requested, a Bottlenose generates and transmits a sparse point cloud using the GigE Vision chunk data protocol. The following steps show how to turn on this feature using the Device Control function of eBusPlayer or Bottlenose Stereo Viewer.

- Launch

Stereo VieweroreBusPlayerand connect to the camera - Click on

Device Controlto open the control panel - Navigate to

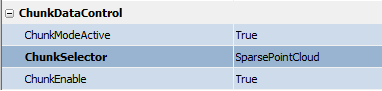

ChunkDataControl - Set

ChunkActiveModetotrueto turn on chunk data transfer - Set

ChunkSelectortoSparsePointCloudto enable the sparse point cloud mode - Finally, set

ChunkEnabletotrue.

Sparse point cloud settings viewed with eBusPlayer

Bottlenose uses the calibration parameters to compute 3D points, so it is important to make sure that the correct calibration has been uploaded to the camera. See the calibration page for details on how to calibrate the camera, and the calibration parameters page for the different ways to upload calibration data to your Bottlenose camera.
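The dependence on calibration can be seen in the textbook triangulation for a rectified stereo pair. There, depth is Z = f·B/d, where f is the focal length in pixels, B is the baseline and d is the disparity between the left and right x coordinates. The sketch below is a minimal illustration under that pinhole, rectified-image assumption. The `RectifiedStereo` class and its field names are hypothetical, and Bottlenose's calibration format and on-camera computation may differ. Because every coordinate scales with f·B and is offset by the principal point, an outdated or wrong calibration distorts the whole point cloud.

```python
from dataclasses import dataclass


@dataclass
class RectifiedStereo:
    """Hypothetical calibration of a rectified stereo pair (pinhole model)."""
    f: float         # focal length after rectification, in pixels
    cx: float        # principal point x of the left camera, in pixels
    cy: float        # principal point y of the left camera, in pixels
    baseline: float  # distance between the two camera centres, in metres

    def triangulate(self, xl, y, xr):
        """Return (X, Y, Z) in the left camera frame, or None if disparity <= 0."""
        d = xl - xr  # disparity in pixels
        if d <= 0:
            return None
        z = self.f * self.baseline / d
        x = (xl - self.cx) * z / self.f
        y3d = (y - self.cy) * z / self.f
        return (x, y3d, z)
```

For instance, with f = 1000 px, a 0.125 m baseline and a 50 px disparity, a point 100 px right of the principal point triangulates to roughly (0.25, 0.0, 2.5) metres in the left camera frame.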

Receiving Point Cloud with Python

The sparse 3D point cloud can be streamed from Bottlenose using Python. For details on how to interact with the camera and stream point cloud data, refer to our sample code. Make sure that the calibration parameters have been updated and that the command-line parameter --offsety1 is set to the offset value used during calibration.

The following code snippet activates the chunk mode and enables sparse point cloud transmission.

```python
# `device` is assumed to be an already connected eBUS PvDevice (see the sample code)

# Get the device parameters from the GigE Vision device
device_params = device.GetParameters()

# Activate chunk mode
chunk_mode = device_params.Get("ChunkModeActive")
chunk_mode.SetValue(True)

# Select the sparse point cloud chunk
chunk_selector = device_params.Get("ChunkSelector")
chunk_selector.SetValue("SparsePointCloud")

# Enable the sparse point cloud chunk
chunk_enable = device_params.Get("ChunkEnable")
chunk_enable.SetValue(True)
```

The code above instructs the Bottlenose camera to attach chunk data to every buffer it sends. In this case, the chunk data contains only the sparse point cloud, but it may also carry other data if additional chunks are enabled. The following call decodes the chunk data attached to an incoming buffer and returns a list of 3D points:

```python
pointcloud = decode_chunk(device=device, buffer=pvbuffer, chunk='SparsePointCloud')
```

Streaming Point Cloud with ROS

The ROS 2 driver for Bottlenose supports sparse 3D point clouds. You can enable this feature when launching the node, as shown below. Make sure that the calibration parameters have been updated and that OffsetY1 is set to the same value used for the calibration set.

ros2 run bottlenose_camera_driver bottlenose_camera_driver_node --ros-args \

-p mac_address:="<MAC>" \

-p stereo:=true \

-p feature_points:=fast9 \

-p features_threshold:=10

-p sparse_point_cloud:=true \

-p AKAZELength:=486 \

-p AKAZEWindow:=20

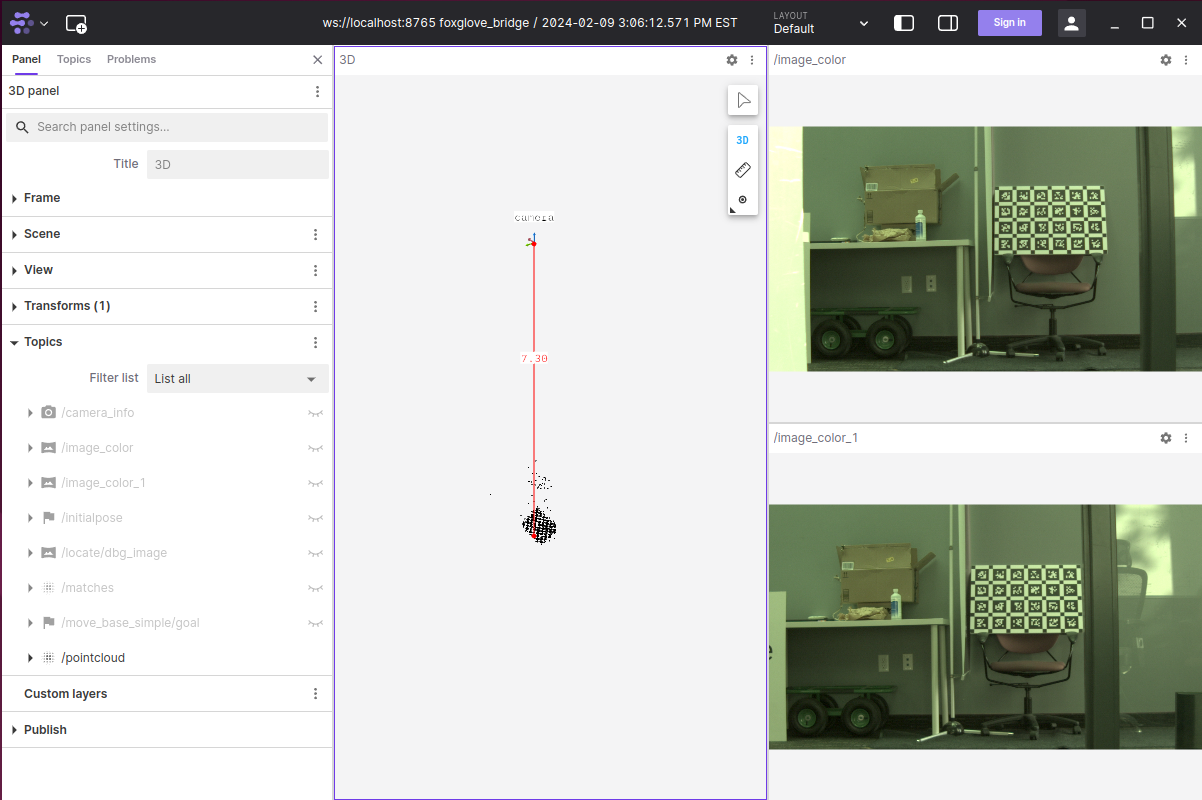

-p OffsetY1:=<offset used during calibration>This command will turn on the stereo acquisition and sparse point cloud, use FAST as the feature detector with a threshold of 10, and set the AKAZE descriptor length to 486 bits with a window size of 20. All other image processing parameters will be set to their defaults. This node will then publish three topics:

image_colorthat carries the left image colour imageimage_color_1for the right image, andpointcloudthat streams the generated PointCloud2 messages containing the 3D points from Bottlenose.

You can now subscribe to the sparse pointcloudtopic for visualization or further processing.

screenshot showing sparse point-cloud topic displayed with Foxglove
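For further processing, the points have to be unpacked from the PointCloud2 byte buffer. The standard helper for this is `read_points` in the `sensor_msgs_py.point_cloud2` module. The dependency-free sketch below shows what that unpacking involves. It assumes that the x, y and z fields are FLOAT32 (PointField datatype 7), which the document does not state, so check `msg.fields` before relying on it. The function name `unpack_xyz` is hypothetical.

```python
import math
import struct


def unpack_xyz(data, point_step, offsets, is_bigendian=False, n_points=None):
    """Return a list of (x, y, z) tuples from a PointCloud2-style byte buffer.

    data: raw point bytes (e.g. bytes(msg.data)).
    point_step: size of one point in bytes (msg.point_step).
    offsets: dict mapping "x", "y", "z" to byte offsets within a point;
             the fields are assumed to be 32-bit floats.
    Points containing NaN (invalid measurements) are skipped.
    """
    fmt = (">" if is_bigendian else "<") + "f"
    if n_points is None:
        n_points = len(data) // point_step
    points = []
    for i in range(n_points):
        base = i * point_step
        x, y, z = (struct.unpack_from(fmt, data, base + offsets[name])[0]
                   for name in ("x", "y", "z"))
        if not any(math.isnan(v) for v in (x, y, z)):
            points.append((x, y, z))
    return points
```

Inside an rclpy subscription callback on the pointcloud topic, this could be called as `unpack_xyz(bytes(msg.data), msg.point_step, {f.name: f.offset for f in msg.fields}, msg.is_bigendian, msg.width * msg.height)`.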
